Classifying Domain Generation Algorithm Domains with Machine Learning

06:27:2024

Domain Generation Algorithms (DGAs) pose a significant challenge for cybersecurity professionals. These algorithms generate a large number of seemingly random domain names, allowing threat actors to constantly evade detection and establish DNS beacons with compromised networks.

However, the traditional approach of blacklisting domains is ineffective against DGAs, necessitating innovative solutions. Here’s what you need to know.

Identifying the Problem

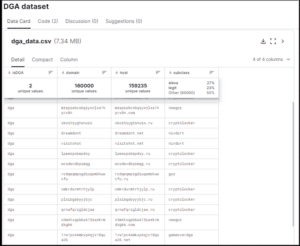

SealingTech engineers used the DGA Dataset from Charles Givre via Kaggle. This dataset contains 160,000 domains labeled as DGA-based or legitimate, along with the specific algorithms used for the DGA domains.

At first glance, detecting DGA domains might seem straightforward, but it’s a complex task for computers. Unlike humans, computers see domain names as mere strings of characters. To tackle this challenge, security experts are turning to machine learning (ML), leveraging advanced algorithms to support computers in recognizing suspicious domain patterns.

In SealingTech’s own research, engineers utilized the DGA Dataset from Charles Givre via Kaggle. This dataset contains 160,000 domains labeled as DGA-based or legitimate, along with the specific algorithms used for the DGA domains.

Moreover, the dataset includes the specific algorithm used to detect DGA domains. This algorithm was instrumental in guiding the classifier when encountering a multi-class classification problem. In those scenarios, the ML model attempts to separate inputs into three or more outputs, as opposed to a binary approach.

Understanding DGA Variability and Using Embedding Models

Not all DGAs are created equal. Some, like Cryptolocker, exhibit seemingly random patterns, while others, like Nivdort, produce human-readable combinations.

Cryptolocker might generate something like jpqftymiuver, whereas Nivdort will join known words together and produce outputs like takenroll.

Recognizing this variability, the SealingTech team categorized DGAs into similar groups, facilitating more accurate classification. This nuanced approach enhances the model’s ability to differentiate between different DGA types.

Traditional feature engineering involves extracting domain features manually, but SealingTech embraced embedding models, which automatically generate fixed-length vectors representing the semantic meaning of domain names. This approach not only streamlines the feature engineering process but also ensures that inputs to the classification model are consistent in length, improving overall accuracy.

Implementing the Model and Evaluating Performance

SealingTech implemented a neural network architecture using PyTorch, with layers tailored to accommodate the embedding model’s fixed-length vectors. Through meticulous training and optimization, our model learned to identify DGA domains with an impressive accuracy of 97%.

During training, engineers tracked the model’s performance over epochs, consistently observing high accuracy and low loss rates. The model’s exceptional performance underscores its efficacy in accurately classifying potentially malicious domain names.

Mechanisms of the Model

The success of SealingTech’s classification model hinges on its intricate mechanisms, combining cutting-edge techniques in ML with meticulous data processing and model architecture. Let’s delve deeper into how it works.

Traditionally, data scientists invest considerable effort in feature engineering to transform raw data into formats suitable for machine learning models. However, recent strides in Natural Language Processing (NLP) have ushered in a new era of embedding models. These models excel at processing text, generating fixed-length vectors that encapsulate the semantic meaning of the input text.

The embedding model’s objective is to ensure that similar domain names are closer together in vector space. By embedding domain names into fixed-length vectors, we eliminate the need for manual feature engineering and provide consistent inputs to our classification model.

Data Preprocessing

SealingTech’s neural network architecture comprises multiple layers, including an input layer with 768 nodes (matching the embedding dimension).

Before feeding any data into the model, ensuring uniformity and balance in the dataset is crucial. SealingTech achieves this by loading the dataset and splitting it equally between legitimate domains and DGA domains. This prevents bias toward any particular class during training.

Furthermore, each domain name is mapped to a numerical value. In our example, legitimate domains are labeled as 0, and Nivdort domains are labeled as 2. All other DGA domain classes are grouped together and assigned the label 1. This strategic grouping simplifies the classification task, focusing on distinguishing legitimate domains from DGA domains rather than predicting specific DGA classes.

Neural Network Architecture

SealingTech’s neural network architecture, implemented using PyTorch, is tailored to accommodate the fixed-length vectors generated by the embedding model. The architecture comprises multiple layers, including an input layer with 768 nodes (matching the embedding dimension), three hidden layers with 64 nodes each, and a final output layer with three nodes corresponding to the three domain classes.

During training, each node in the neural network performs computations on the input data, applying weights and biases to produce an output. These weights and biases are continuously adjusted during training to minimize the error, guided by the chosen loss function.

Training Process

The training process involves iteratively passing inputs through the model, calculating loss, and optimizing model parameters to improve accuracy. Engineers employed an optimizer and loss functions to evaluate the model performance and fine-tune weights accordingly.

Once trained, the model undergoes evaluation on the test data, where the SoftMax function is applied to obtain class probabilities. The accuracy of the model on the test dataset serves as a benchmark for its performance.

Unlock the Power of AI in Cybersecurity

SealingTech’s classification model represents a synergy of advanced ML techniques and meticulous data processing. By integrating embedding models, optimizing neural network architecture, and refining training processes, we have developed a robust solution for identifying DGA domains with high accuracy.

This comprehensive approach underscores our commitment to leveraging AI and ML in combating cybersecurity threats effectively.

Want to learn more about how our innovative solutions can enhance your cybersecurity posture? Reach out to the SealingTech team today to discover the transformative potential of AI in safeguarding your digital assets.

Related Articles

The Call for Explainable AI

Enhancing Network Visibility with Machine Learning Artificial intelligence (AI) and machine learning are transforming business processes across industries. For many organizations, data has become their most valuable asset. The ability…

Unsupervised Learning for Cybersecurity

Dashboards and automated alerts remain well-established fundamental components of nearly every cybersecurity team’s toolbelt. Peel back the layers of a network monitoring tool suite, and you’ll discover that every team…

Operator X: An Intern Experience

SealingTech’s exciting new innovation Operator X is a chat interface built to assist cyber operators by bridging knowledge gaps via the use of cutting-edge generative AI tools and techniques. It…

Could your news use a jolt?

Find out what’s happening across the cyber landscape every month with The Lightning Report.

Be privy to the latest trends and evolutions, along with strategies to safeguard your government agency or enterprise from cyber threats. Subscribe now.