Enhancing Network Visibility with Machine Learning

Artificial intelligence (AI) and machine learning are transforming business processes across industries. For many organizations, data has become their most valuable asset, and the ability to make data-driven decisions has gone from a competitive advantage to a necessity. With modern computing resources, data can be processed and models trained at unprecedented scale, yielding new benefits and insights. Still, as models grow in size, depth, and sophistication, they also grow in complexity.

Many of the most accurate machine learning models fall within a subgroup called “black-box models.” These models (or algorithms) produce accurate and useful outputs but do not reveal their inner workings. Examples include neural networks of all kinds and support vector machines, among many others. While highly useful, these methods perform their calculations out of view, so end users have no insight into how the model arrived at its prediction.

For federal agencies and other organizations that operate under heavy regulation and oversight, this presents a problem: decision makers need to be able to explain how they arrived at their conclusions. Without the ability to explain model outputs, stakeholders cannot truly justify decisions based on machine learning models.

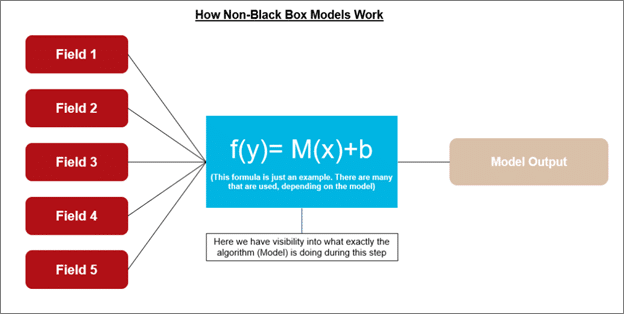

Consider the following diagram to understand how non-black-box models work:

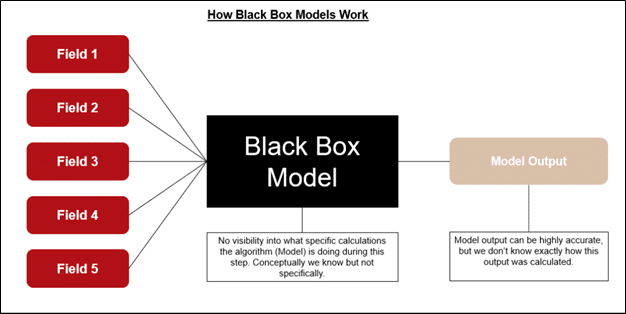

Now observe how black-box models operate:

This need for greater visibility into the inner workings of machine learning models has given rise to a field known as explainable AI (XAI).

What is XAI?

IBM defines explainable AI (XAI) as a “set of processes and methods that allows human users to comprehend and trust the results and output created by machine learning algorithms.” Explainable AI is used to describe an AI model, its expected impact, and its potential biases, while adding transparency to how individual predictions were generated and which factors contributed most to the output. To get a conceptual understanding of how XAI can be leveraged, consider the following scenario.

You are a data scientist working at a large bank. Your employer would like to start using machine learning to decide which mortgage loan applications to approve, with the goal of reducing bias in loan approvals and increasing profits. However, there is a complication. Although the approach is advantageous from a profit and risk standpoint, federal regulation requires lenders to provide a written explanation for any loan application they deny. Your employer must therefore be able to justify every loan denial, including those based on your machine learning model. Simply saying “Our machine learning model predicted that you are likely to default, so we are denying your loan application” would not meet the regulatory standard. Your employer must be able to explain which factors were considered, how they contributed to the model output, and how that output shaped the final decision.

Fortunately, you understand explainable AI and can provide your company with information on the model that includes:

- Which factors the model weighs most heavily

- Which variables contributed most to an individual conclusion

- How each of those variables contributed to the conclusion

- How a change in those factors could have altered the decision
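To make the list above concrete, here is a minimal sketch under loudly stated assumptions: a hypothetical linear risk score with made-up feature names, weights, applicant values, and approval threshold. For a linear model whose features are treated as independent, each feature's Shapley value reduces to its weight times the feature's deviation from the average applicant, so the contributions can be computed directly. The last lines illustrate the fourth bullet: a what-if change to a single factor flips the decision.

```python
# Hypothetical linear risk score: higher score = higher predicted default risk.
# Feature names, weights, bias, and all values below are illustrative assumptions.
WEIGHTS = {"debt_to_income": 4.0, "credit_utilization": 2.5, "years_employed": -0.3}
BIAS = -3.0
THRESHOLD = 0.0  # hypothetical policy: deny when score > THRESHOLD

# Average feature values over a hypothetical reference population of applicants.
BACKGROUND_MEAN = {"debt_to_income": 0.30, "credit_utilization": 0.40, "years_employed": 6.0}


def score(applicant):
    """Linear risk score: bias plus the sum of weight * feature value."""
    return BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)


def linear_shap(applicant):
    """Shapley values for a linear model with independent features:
    weight times the feature's deviation from the background mean."""
    return {name: WEIGHTS[name] * (applicant[name] - BACKGROUND_MEAN[name]) for name in WEIGHTS}


applicant = {"debt_to_income": 0.55, "credit_utilization": 0.90, "years_employed": 1.0}
contributions = linear_shap(applicant)
base_value = score(BACKGROUND_MEAN)  # model output for the average applicant

# Which variables contributed most to this applicant's score, largest first.
for name, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>20}: {value:+.2f}")

# Base value plus contributions reproduces the applicant's score exactly.
print(f"base {base_value:+.2f} + contributions = {base_value + sum(contributions.values()):+.2f}")
print(f"model score = {score(applicant):+.2f}")

# What-if: lowering credit utilization to the population average.
what_if = dict(applicant, credit_utilization=0.40)
print("original decision:", "deny" if score(applicant) > THRESHOLD else "approve")
print("what-if decision: ", "deny" if score(what_if) > THRESHOLD else "approve")
```

A real credit model is rarely this simple; for non-linear models the contributions must be estimated rather than read off the weights.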

XAI In Cybersecurity

As a Systems Engineer at SealingTech, I’ve found that being able to explain machine learning model outputs significantly augments an incident responder’s ability to investigate anomalous behavior on a network. Many models can identify anomalous network traffic, but flagging an anomaly without being able to explain it is far less useful than being able to outline what made a particular behavior anomalous.

While there are many open-source XAI techniques and frameworks, the Shapley Value approach stands out as among the most useful. Named after Lloyd Shapley, who introduced it in 1951, the Shapley Value comes from cooperative game theory, where it was designed to divide a game's total payoff fairly among the players who produced it. A sports team offers an intuitive analogy: by calculating each player's marginal contribution to the team, averaged over all the different orders in which the team could have been assembled, Shapley Values show how much of a difference each player made. If we view our machine learning model as the game being played, its prediction as the outcome of the game, and the input features as the players, we can apply Shapley Values to understand how each feature shaped the model's result.
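The marginal-contribution idea corresponds to the standard Shapley value formula. For a set N of n players and a value function v that assigns a payoff to every coalition S of players, player i's value averages i's marginal contribution v(S ∪ {i}) − v(S) over all coalitions that exclude i, weighted by the fraction of player orderings that produce each coalition. The two-player payoffs in the second part are made up purely to show the arithmetic; note that the values add up to the total payoff.

```latex
\phi_i(v) = \sum_{S \subseteq N \setminus \{i\}} \frac{|S|!\,(n - |S| - 1)!}{n!}\,\bigl[v(S \cup \{i\}) - v(S)\bigr]

% Hypothetical two-player game: v(\emptyset) = 0,\; v(\{A\}) = 10,\; v(\{B\}) = 20,\; v(\{A,B\}) = 40
\phi_A = \tfrac{1}{2}\bigl[(10 - 0) + (40 - 20)\bigr] = 15, \qquad
\phi_B = \tfrac{1}{2}\bigl[(20 - 0) + (40 - 10)\bigr] = 25, \qquad
\phi_A + \phi_B = 40 = v(\{A,B\}) - v(\emptyset)
```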

Applying the same analogy to cybersecurity: when we monitor network traffic for anomalies, the determination of whether a finding is anomalous can be viewed as the game result and the network traffic characteristics as the players. After running our anomaly detection, we can use Shapley Values to determine which traffic characteristics played the largest role in the traffic being classified as anomalous.
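A minimal sketch of this idea computes exact Shapley values by enumerating every coalition of traffic features. Everything here is hypothetical: the feature names, the observed and "typical" baseline flows, and the toy anomaly_score function that stands in for a trained detector. Features outside a coalition are filled in from a single baseline flow, which is one simplifying choice among several; SHAP's explainers typically average over a background dataset instead.

```python
from itertools import combinations
from math import factorial

# Hypothetical traffic features for one flow; names and values are illustrative only.
FEATURES = ["dst_to_src_duration", "dst_bytes", "src_pkts", "rare_dst_port"]
observation = {"dst_to_src_duration": 900.0, "dst_bytes": 5.0e6, "src_pkts": 40.0, "rare_dst_port": 1.0}
# Assumed "typical" flow used for features that are absent from a coalition.
baseline = {"dst_to_src_duration": 30.0, "dst_bytes": 2.0e4, "src_pkts": 20.0, "rare_dst_port": 0.0}


def anomaly_score(flow):
    """Toy anomaly score (higher = more anomalous), standing in for a trained detector."""
    score = 0.002 * flow["dst_to_src_duration"] + 1e-7 * flow["dst_bytes"] + 0.01 * flow["src_pkts"]
    # Interaction: long transfers to a rarely used port are treated as extra suspicious.
    score += 0.5 * flow["rare_dst_port"] * (flow["dst_to_src_duration"] > 600)
    return score


def coalition_value(coalition):
    """v(S): score with features in S at observed values and the rest at baseline values."""
    flow = {f: (observation[f] if f in coalition else baseline[f]) for f in FEATURES}
    return anomaly_score(flow)


def exact_shapley(features):
    """Weighted average of each feature's marginal contribution over all coalitions."""
    n = len(features)
    phi = {}
    for i in features:
        others = [f for f in features if f != i]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (coalition_value(s | {i}) - coalition_value(s))
        phi[i] = total
    return phi


phi = exact_shapley(FEATURES)
for name, value in sorted(phi.items(), key=lambda kv: -kv[1]):
    print(f"{name:>20}: {value:+.3f}")
print("sum of contributions:", round(sum(phi.values()), 6))
print("f(x) - f(baseline):  ", round(coalition_value(set(FEATURES)) - coalition_value(set()), 6))
```

Exact enumeration visits 2^(n-1) coalitions per feature, so it is only practical for a handful of features. The interaction term's credit ends up split evenly between dst_to_src_duration and rare_dst_port, since neither triggers it alone.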

SHAP, which stands for SHapley Additive exPlanations, is a framework that helps us interpret machine learning models using the Shapley Value approach. Its visualizations provide a clearer understanding of model results, and this information helps guide cybersecurity analysts as they investigate anomalies detected by a machine learning model.
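SHAP represents each explanation additively: the model output for an observation equals a base value (the average output over background data) plus one contribution per feature. Because exact enumeration grows exponentially with the number of features, contributions are usually estimated. The sketch below shows one such estimator, averaging marginal contributions over randomly sampled feature orderings, using a hypothetical toy model and invented background data. The `shap` package includes a permutation-based explainer built on a similar idea, but this is not its implementation.

```python
import random


def permutation_shapley(model, x, background, n_permutations=500, seed=0):
    """Monte Carlo estimate of Shapley values by sampling feature orderings.

    model: callable taking a dict of feature values and returning a number.
    x: dict of feature values for the observation being explained.
    background: list of dicts whose values stand in for "absent" features.
    """
    rng = random.Random(seed)
    features = list(x)
    phi = {f: 0.0 for f in features}
    for _ in range(n_permutations):
        order = features[:]
        rng.shuffle(order)
        current = dict(rng.choice(background))  # start from a random background row
        prev = model(current)
        for f in order:
            current[f] = x[f]  # reveal feature f's observed value
            value = model(current)
            phi[f] += value - prev  # marginal contribution of f in this ordering
            prev = value
    return {f: total / n_permutations for f, total in phi.items()}


# Hypothetical toy model with an interaction between a rare-port flag and data volume.
def toy_model(flow):
    return 0.5 * flow["duration_min"] + 2.0 * flow["rare_port"] * flow["gb_out"]


background = [
    {"duration_min": 1.0, "rare_port": 0.0, "gb_out": 0.0},
    {"duration_min": 3.0, "rare_port": 0.0, "gb_out": 0.1},
]
x = {"duration_min": 15.0, "rare_port": 1.0, "gb_out": 2.0}

estimate = permutation_shapley(toy_model, x, background)
for name, value in sorted(estimate.items(), key=lambda kv: -kv[1]):
    print(f"{name:>12}: {value:+.3f}")
```

Because the duration term is additive, its estimate depends only on which background row is drawn and should settle near 6.5, the average of 0.5 * (15 - 1) and 0.5 * (15 - 3). The contributions sum to f(x) minus the average background output, about 11.5 - 1.0 = 10.5 here.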

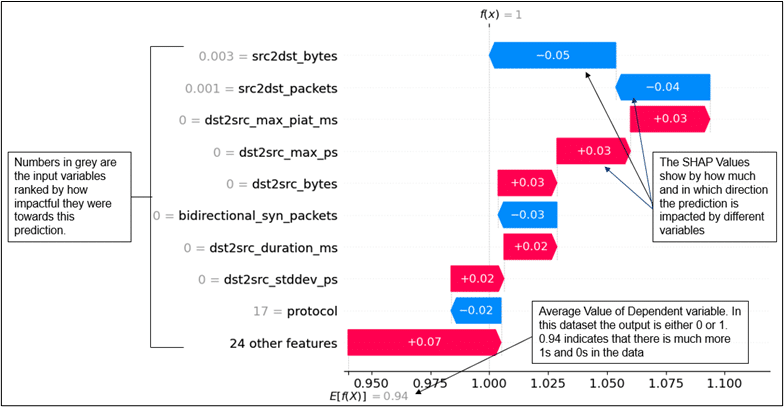

Consider the following visualizations of Shapley Values from an anomaly detection model built with synthetic data:

The SHAP bar plot shows the seven most influential features in our model, which tells us which fields generally have the most influence on this model's predictions. In this case, the duration of time the destination IP spent transferring data to the source IP was the most influential factor.
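When given SHAP values for many observations, SHAP's bar plot ranks features by their mean absolute SHAP value across those observations. The sketch below computes that ranking and prints a rough text bar chart; the SHAP values and feature names are invented for illustration and are not results from the model described here. The top_k=7 default mirrors the seven features mentioned above.

```python
# Hypothetical SHAP values: one row per observation, one column per feature.
feature_names = ["dst_to_src_duration", "dst_bytes", "src_pkts", "dst_port"]
shap_values = [
    [0.42, -0.10, 0.05, 0.01],
    [-0.35, 0.20, -0.02, 0.03],
    [0.50, 0.05, 0.08, -0.04],
]


def global_importance(values, names):
    """Mean absolute SHAP value per feature, sorted from most to least influential."""
    n_rows = len(values)
    means = {name: sum(abs(row[j]) for row in values) / n_rows for j, name in enumerate(names)}
    return sorted(means.items(), key=lambda kv: kv[1], reverse=True)


def text_bar_plot(importance, top_k=7, width=40):
    """Print a crude text version of a bar plot for the top_k features."""
    top = importance[:top_k]
    scale = width / top[0][1] if top and top[0][1] > 0 else 0
    for name, value in top:
        print(f"{name:>20} | {'#' * round(value * scale)} {value:.3f}")


ranking = global_importance(shap_values, feature_names)
text_bar_plot(ranking)
```

Taking absolute values before averaging matters: a feature that pushes some predictions up and others down, like dst_to_src_duration here, would otherwise appear unimportant.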

Let’s look at another visualization of SHAP values to understand a specific observation:

This SHAP waterfall plot explains how different factors contributed to an individual prediction. The prediction itself is shown at the top, where f(x) = 1, meaning this observation is not anomalous. The plot shows which fields contributed most to this prediction and whether each individual value made the observation look more anomalous or less anomalous.
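A waterfall plot starts from the base value E[f(X)], the model's average output over the background data, and adds one feature contribution at a time until it reaches f(x). The sketch below reproduces that arithmetic as text, ranking contributions by magnitude as the plot's rows are ranked. The feature names and numbers are hypothetical, chosen only so the total ends at f(x) = 1. Whether a positive contribution means "more anomalous" depends on how the model's output is defined, so the code only reports whether each feature raises or lowers the output.

```python
def waterfall_steps(base_value, contributions):
    """Return (feature, contribution, running_total) tuples, largest |contribution| first.
    The running total starts at the base value E[f(X)] and ends at f(x)."""
    steps = []
    running = base_value
    for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
        running += value
        steps.append((name, value, running))
    return steps


# Hypothetical explanation of one observation; all numbers are invented.
base_value = 0.80  # assumed E[f(X)]: average model output over background data
contributions = {
    "dst_to_src_duration": 0.15,
    "dst_bytes": 0.08,
    "src_pkts": -0.05,
    "dst_port": 0.02,
}

steps = waterfall_steps(base_value, contributions)
print(f"{'E[f(X)]':>20}   {base_value:.2f}")
for name, value, running in steps:
    effect = "raises" if value > 0 else "lowers"
    print(f"{name:>20}: {value:+.2f} ({effect} output) -> {running:.2f}")
print(f"{'f(x)':>20}   {steps[-1][2]:.2f}")
```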

This beeswarm plot shows how high or low feature values in the dataset affect the SHAP values and, ultimately, the model's predictions.
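A beeswarm plot draws one dot per observation for each feature, positioning it by SHAP value and coloring it by the feature's value. The sketch below condenses that picture into two numbers per feature: the mean SHAP value for observations above the feature's median, and for those at or below it. The data are invented for illustration, and this high/low summary is a simplification introduced here, not a SHAP function.

```python
from statistics import fmean, median


def high_low_effect(feature_values, shap_values):
    """Mean SHAP value for observations above vs. at-or-below the feature's median."""
    cut = median(feature_values)
    high = [s for v, s in zip(feature_values, shap_values) if v > cut]
    low = [s for v, s in zip(feature_values, shap_values) if v <= cut]
    return (fmean(high) if high else 0.0, fmean(low) if low else 0.0)


# Hypothetical (feature values, SHAP values) pairs for six observations.
data = {
    "dst_to_src_duration": ([10, 20, 600, 900, 30, 1200], [-0.10, -0.08, 0.30, 0.45, -0.05, 0.60]),
    "src_pkts": ([5, 80, 12, 60, 7, 90], [0.02, -0.03, 0.01, 0.00, 0.02, -0.04]),
}

for name, (values, shaps) in data.items():
    high, low = high_low_effect(values, shaps)
    print(f"{name:>20}: high values -> {high:+.3f}, low values -> {low:+.3f}")
```

In a beeswarm plot, the first feature would show high-value dots clustered on the positive side and low-value dots on the negative side, while the second would show small effects near zero.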

These are only three of the many helpful ways to visualize and interpret SHAP values. With XAI, machine learning models become more trustworthy because their outputs can be understood, explained, and delivered to end users in a visual format. As more organizations seek to leverage AI and machine learning technologies, XAI tools like Shapley Values will become an increasingly important capability to possess.

At SealingTech, our skilled team possesses a combined wealth of knowledge and expertise in the complexities of cybersecurity and anomaly detection. We’re well-versed in developing defense solutions that help our customers secure their networks and systems against malicious attacks. To learn more about explainable AI and achieving greater visibility of your networks, contact our team today.
